In this post, I describe porting a small application, a calculator called SpeedCrunch, to WebAssembly, along with some common issues that need to be addressed along the way. I hope some of these solutions prove helpful and save you time should you want to target the WebAssembly platform with your own code.

For those eager to see the result, the application is available here: SpeedCrunch (WebAssembly)

I have also compiled and bundled a new Windows version (with all my changes included): /files/speedcrunch/speedcrunch-0.12.1.zip

Introduction

Porting a Qt application to WebAssembly (WASM) presented some unique challenges. Although Qt is known for its cross-platform capabilities, targeting WebAssembly was initially difficult, but improved support in later versions smoothed the process. WebAssembly was announced in 2015 and first released in March 2017, and Qt added it as an officially supported target in Qt 5.13.

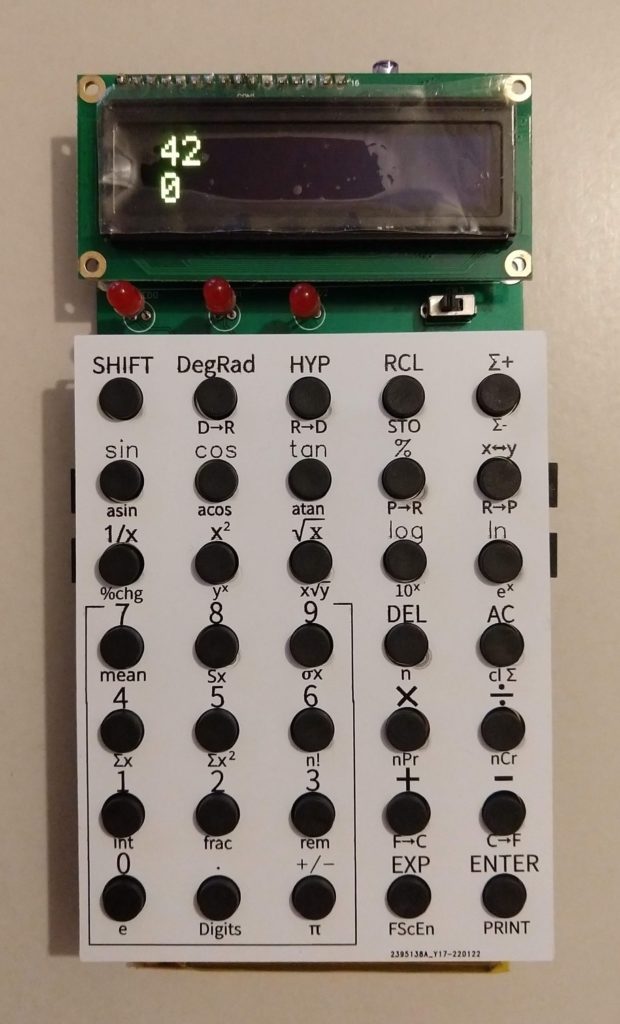

The application I wanted to port is a small but highly practical calculator called SpeedCrunch. The desktop version was my trusted companion for more than a decade, particularly for work involving binary number manipulation, thanks to its efficient binary digits editor. This feature was invaluable for working with binary representations, a common task in my professional workflow as a CPU architect. Beyond work, I also used the application for various personal calculations.
